Goodbye Colocated Data Centers: How We Moved Postmark to AWS

Last year, Postmark celebrated its tenth anniversary. In 2010, Postmark was built using the best tools and methodologies available, but over the years, it accumulated many responsibilities that weren’t anticipated when it launched.

Additionally, as many readers will remember, the landscape and methods for building software substantially evolved over those 10 years:

- Async web frameworks were in their infancy.

- Docker didn’t exist.

- jQuery was the de facto way to build interactive web apps.

- 12 Factor App hadn’t been presented.

- “Infrastructure as code” didn’t exist.

- “Serverless” hadn’t been coined.

Today, I’d like to share some details about one of the largest projects our team has ever embarked on—moving from our colocated data center to AWS—with some of the lessons we learned on this journey and tools we used to be successful.

Part I: The journey begins #

Make the next right decision #

As with most early-stage apps, you have to make trade-offs to validate the product quickly, find product-market fit, deliver core functionality, and defer “unsolvable” problems until the product can reach escape velocity... there’s a reason we call it launching the product.

The Postmark team was able to navigate all of these challenges to build a product that is beloved by customers—but, as products mature, the trade-offs that are made early need to be addressed. To be clear, this is a natural part of building software products, and all successful products make these trade-offs (does anyone remember Twitter’s “fail whale”?).

At our company retreat in 2018, we identified that Postmark needed a significant architectural re-think to ensure we could deliver a consistent and reliable experience to our customers for the years to come.

The core goals we outlined included:

- Architecture that allowed us to scale horizontally as we grow.

- Reduced dependence on traditional physical infrastructure.

- Better processes to predictably scale services as the customer base grows.

- Improved observability and monitoring to reduce over-alerting to the team.

- Increased confidence when shipping updates to customers.

During that retreat, we made a few major decisions that set our journey into motion:

- We would migrate all blob content out of our primary MySQL and Elasticsearch data stores into S3.

- We would begin refactoring our “distributed monolith” into self-contained services that adhered to the guidance outlined in the 12 Factor App framework.

- We would adopt a “touch it, fix it” mindset: any service that needed enhancement would be reviewed and refactored to meet the goals we outlined above.

These decisions fundamentally changed how we viewed our work to improve Postmark.

More cloud, less fog #

We’re a small team that wants to build a great product, but building out physical infrastructure requires lead time, constant care, and actual humans to physically do things.

As software engineers, we want to minimize the number of people and amount of communication that is necessary to deliver Postmark to customers. Referring back to the goals we set for ourselves, two related directly to our ability to meet the capacity demands of a growing customer base:

- Reduced dependence on traditional physical infrastructure.

- Better processes to predictably scale services as the customer base grows.

These goals are what the cloud is built for. There is no doubt that moving to any public cloud provider costs more than operating in a traditional colocated facility, but we reasoned that this would allow us to better respond to customer growth, and give our small team the power to select the right tools for particular workloads and reduce coordination overhead in improving the product.

We’ve been fully in AWS for a year, and I’d assert that all of the above has happened.

So I’d like to talk a little more about the strategy we took to accomplish this move, pacing ourselves, and de-risking the transition. In the end, we were able to achieve a seamless move from our colocated data center to AWS, with a one-hour maintenance window, and zero support tickets.

Baby steps #

The fact that we’ve moved to AWS from colocated hosting is not particularly unique in its own right. Many companies have made successful transitions, or are in the process of doing so. However, I do think our approach allowed us to gain traction and demonstrate continuous improvements, both for our team and our customers.

I liken our approach to moving. I’ve lived in several apartments and homes over the years: each time, I’d pack all of my belongings, move them tens or hundreds of miles, and then unpack them and decide I didn’t want to keep some of the items.

This is not an efficient way to move—and if time and organizational support allow for it, not how workloads should be moved to the cloud, either.

At Wildbit, we have the benefit of a management team that supported our efforts to look at each component, evaluate the current and ideal states for it, and then refactor the component as we moved it to AWS. For the vast majority of our migration, we took this approach.

This careful review and refactor work gave us the following benefits:

- We’d have better visibility into whether things were “working” when they were moved.

- We could more accurately predict costs, and do some optimization to manage them as we moved.

- We’d gradually reduce the severity/number of alerts and service issues across Postmark: each refactored service reduced the stress of operating the system.

- We’d grow our understanding of AWS services incrementally. We didn’t need to learn the ins-and-outs of all of the services we intended to use in one go.

- We could assess and update services to conform to the guidelines in 12 Factor App.

- We would reduce and quantize many of the risks associated with a migration to the cloud.

- We could choose aspects of the system that were the biggest pain points (or the simplest to move) first, allowing us to demonstrate value early.

It’s important to note: this approach takes more up-front time than “lift-and-shift” strategies, but is also more predictable and less chaotic than the alternatives. Our small team was able to migrate one or two services per quarter, but we achieved a good cadence of releases that regularly delivered reliability and performance gains. These gains compounded as additional services transitioned.

Traction #

Great, we’re going to the cloud. Where should we start?

Some history: Like most apps starting out, Postmark was built centered around a few core databases. These served us well but were very difficult to scale, and represented capacity/availability risks.

I’m also going to level with you: We were using MySQL as a queue. All mail we processed would be written to MySQL, processed, and then archived in Elasticsearch.

Although this is not considered a best practice, it was simple, and it served us well for 8 years. We made numerous improvements along the way, like switching to NVMe drives and adding more RAM and more cores, but these are really just band-aids if you can see your product growing.

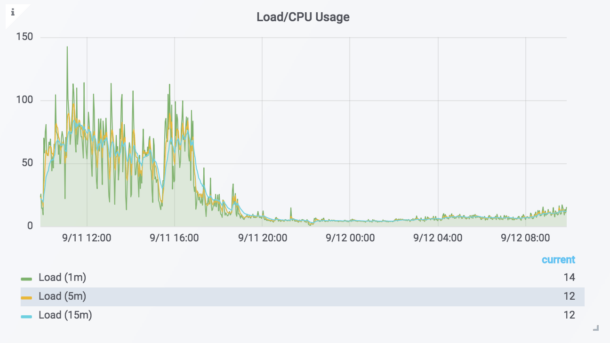

We knew that these I/O intensive operations were becoming a bottleneck for MySQL, but our first major change of moving blob content to the cloud (S3) showed astonishing results:

Immediately following the release of the S3 refactor, we saw peak MySQL load drop by more than 8x, which created more room for us to work and take bigger risks in other areas of the system.

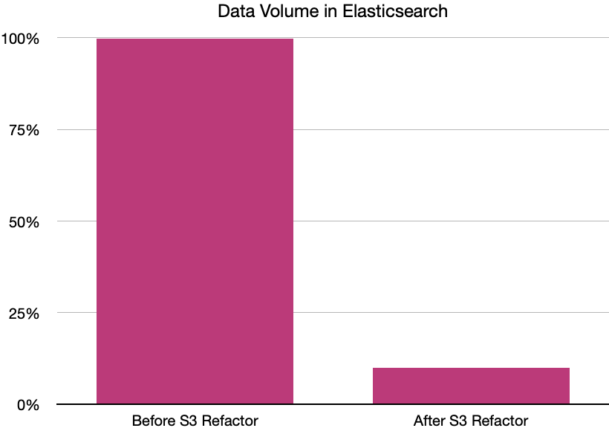

As we fully adopted S3 as our blob storage, we achieved a 90% reduction in data stored in our Elasticsearch cluster.

A dramatic re-enactment of the data reduction:

So, like, a lot.

What’s more, we knew the benefits and could demonstrate the cost savings when it came to sizing a new Elasticsearch cluster in AWS. Moving, then optimizing, would have made the cluster in AWS 10x more expensive and substantially complicated the process of migrating our on-premises cluster to AWS.

As a side note, this example also demonstrates a major reason for democratizing access to resources. A blob store was always the best place for this content, but due to the limited size and resources of our team, the path of least resistance was to push it to the data stores we already had running.
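To make the blob offload concrete, here is a minimal sketch of the pattern: the large message body goes to an object store, and the database row keeps only a pointer to it. This is not our production code. `InMemoryBlobStore`, `store_message`, `load_body`, and the key layout are all hypothetical names invented for illustration, and the in-memory store stands in for S3 so the example runs without any AWS dependencies.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field


@dataclass
class InMemoryBlobStore:
    """Hypothetical stand-in for an object store such as S3."""

    objects: dict[str, bytes] = field(default_factory=dict)

    def put(self, key: str, body: bytes) -> None:
        self.objects[key] = body

    def get(self, key: str) -> bytes:
        return self.objects[key]


def store_message(db_rows: list[dict], blobs: InMemoryBlobStore,
                  message_id: str, subject: str, body: bytes) -> dict:
    """Write the body to the blob store and keep only a pointer in the row."""
    # Assumed key layout: message id plus a content hash.
    key = f"messages/{message_id}/{hashlib.sha256(body).hexdigest()}"
    blobs.put(key, body)
    row = {"id": message_id, "subject": subject,
           "body_key": key, "body_size": len(body)}
    db_rows.append(row)  # the "database" here is just a list of small rows
    return row


def load_body(blobs: InMemoryBlobStore, row: dict) -> bytes:
    """Fetch the body on demand using the pointer stored in the row."""
    return blobs.get(row["body_key"])
```

The point of the design is that the rows the database has to read, write, and replicate stay small no matter how large the message bodies get.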

Emboldened by our S3 success, we started to look at other workloads that would benefit from moving to the cloud. Although we initially discussed converting our Windows services to 12 Factor App (12FA) processes, in October 2018 we started to look seriously at AWS Lambda. Many of the principles defined in 12FA still apply to Lambda stacks, but this was the first of many times when we revised our approach as we learned more.

The first service to move to Lambda was the one that processes webhooks. This had been a perennial source of stress for us, as we couldn’t easily scale up concurrency, and our customers’ endpoints could create back pressure that would impact the timeliness of webhook delivery for all customers. The nature of serverless processes, and Lambda in particular, felt like a good match for a workload that is dynamic and highly concurrent.

We set out to refactor webhooks into a dedicated Lambda stack in AWS. Moving to the serverless stack allowed us to dynamically scale the system in seconds, while completely isolating webhook processing from all other resources within Postmark. This provided both operational and security wins compared to our previous system.

Additionally, when the webhooks system was released, we reviewed and defined more appropriate service health metrics, using structured logging and ingesting additional CloudWatch metrics into Prometheus to detect problems. This contributed to a shared understanding on our team of what “working” looked like for this service: what “normal” looked like, and when to react, scale, or escalate when things weren’t “normal.”

Here’s an example dashboard for this system. We have top-level metrics, and then get more granular to allow for pin-pointing bottlenecks or issues in this service:

At this point, it is worth mentioning that although we strive to make Postmark simple to approach for our customers, internally, it is a complex system with many moving parts.

Still waters run deep.

Over the last two years, we have progressively migrated and isolated many services that support all of the features that Postmark provides. A sampling:

- Webhooks

- Bounce processing

- Statistics

- Mail Processing/Sending

- Engagement Tracking

- Outbound Activity/Delivery Event Processing

- Suppression List Management

- Inbound Mail Processing

- Billing

- User Interface

- API

- Web App

- More secret sauce…

Using the playbook we had developed for webhooks, many of the above services were refactored to Lambda stacks that have well-defined service boundaries, are highly concurrent, and in many cases, idempotent. Frequently, these refactors also encompassed changes required to support Message Streams.
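Since idempotency comes up here, a small sketch may help show what it means for a handler. This is an illustration only, not our Lambda code: `make_idempotent_handler`, the `event_id` field, and the in-memory `seen` set are hypothetical. A real serverless function would need a durable store with a conditional write in place of the set, because function instances do not share memory.

```python
from __future__ import annotations

from typing import Callable


def make_idempotent_handler(deliver: Callable[[dict], None],
                            seen: set[str]) -> Callable[[dict], bool]:
    """Wrap `deliver` so that a redelivered event is processed at most once."""

    def handle(event: dict) -> bool:
        event_id = event["event_id"]  # assumed unique per logical event
        if event_id in seen:
            return False  # duplicate delivery: skip without side effects
        deliver(event)
        # Record the id only after delivery succeeds, so that a failed
        # attempt can be retried later.
        seen.add(event_id)
        return True

    return handle
```

With a wrapper like this, retrying an event after a timeout or a crash is safe, which is what makes aggressive retries and high concurrency practical.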

The big takeaways #

As engineers, we often talk about paying down <scare quotes>Technical Debt</scare quotes>, but it is worthwhile to think about Technical Dividends, too.

With each refactored service, we addressed the associated reliability risks and operational problems that were creating stress for our team and distracting us from the next steps. This is a slow process, and the results aren’t always immediately apparent, but persistence is key.

Team velocity will improve, things will get better.

We prioritized migrating the areas that were creating the most stress and distraction first, and each release made future work easier and built our confidence.

It’s also easy to focus on the number of workloads left to move as a gauge for measuring progress during a cloud migration, but it’s the wrong metric: it prioritizes doing work over outcomes. We centered the way we measured progress around reliability, stress levels for the team, and longer-term objectives of being able to build more features and a more reliable product with the same size team. That focus allowed us to make choices that would have more lasting impact than “lift-and-shift.”

Part II: Getting a grip #

Migrating Postmark to AWS was the largest project the team has ever embarked on. We set out with several high-level goals, but two in particular required specific tools and techniques that I want to cover below:

- Improved observability and monitoring to reduce over-alerting to the team.

- Increased confidence when shipping updates to customers.

You can’t fix what you can’t measure #

At Wildbit, we have historically used tools like Librato, New Relic, Bugsnag, and others to capture information about the health of our systems. Each of these has a place, but getting a high-level view of system health is challenging with the information in so many different systems. In July 2018, we adopted Grafana & Prometheus to help us get a handle on where the problems were.

In our case, we saw immediate benefits by collecting metrics related to our app’s shared resources: MySQL, Elasticsearch, RabbitMQ, etc. These were easy to add and paid dividends right away. Monitoring queue depths and behaviors is a great proxy for detecting (the existence of) problems in a distributed system. These kinds of metrics are always valuable, and they are an especially good starting point in apps that do not yet have good observability.

Prometheus also gave us a way to easily add appropriate monitoring and alerting to all new components as they were released, which allowed us to gradually gain confidence in deployments and detect regressions rapidly. Our Prometheus configurations are source-controlled and continuously deployed, which makes it easy for all engineers to add metrics and alerts as they release updates. It has been our experience that it’s substantially easier for an engineer to identify key metrics during development than it is to heavily instrument the code retroactively.

“Predicting” the future #

As with many apps, Postmark’s load is somewhat cyclical. Comparing “now” to the same timeframe last week or last month has allowed us to calibrate our expectations and see “what changed”—Grafana and Prometheus make this type of review trivial.

It is also hard to overstate the value of being able to look back a week or month to see what “normal” looks like when determining the severity or importance of a change. Grafana and Prometheus make this kind of information trivially easy to digest.

For example, in the following chart, we can compare our Bounce Processing Rate to 4 weeks ago. This is a powerful way to quickly understand if there was significant change in system/customer behavior:

Another key factor in our decision to use Prometheus was the ability to compose complex alerting rules from any of the metrics that have been collected. This allowed us to tune our alerting so that we could substantially reduce the number of nuisance pages. The thresholds we chose in this process can be considered an “internal SLA”—it’s the standard to which we hold ourselves accountable.
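The logic behind a composed rule like this can be sketched in a few lines. In PromQL, the look-back comparison is typically written with the `offset` modifier; the Python below only mirrors the idea and is not one of our actual rules. The function names and the thresholds (`max_drop`, `max_queue_depth`) are hypothetical values chosen for illustration.

```python
def relative_change(current: float, baseline: float) -> float:
    """Fractional change of `current` versus `baseline` (-0.5 means a 50% drop)."""
    if baseline == 0:
        return float("inf") if current > 0 else 0.0
    return (current - baseline) / baseline


def should_page(current_rate: float, rate_4_weeks_ago: float, queue_depth: int,
                *, max_drop: float = 0.5, max_queue_depth: int = 10_000) -> bool:
    """Page only when throughput fell sharply AND work is piling up."""
    dropped = relative_change(current_rate, rate_4_weeks_ago) <= -max_drop
    backed_up = queue_depth > max_queue_depth
    # Either signal alone is often benign (a quiet day, or a brief burst);
    # requiring both is what cuts down on nuisance pages.
    return dropped and backed_up
```

Combining two signals this way is a simple example of why composing rules from several metrics tends to produce fewer, more meaningful alerts than a single static threshold.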

Grafana and Prometheus gave us the insight we were missing to make big changes. Seeing utilization graphs go “down and to the right” when we made progress was heartening and gave us the energy to continue.

The move to Prometheus also made exposing metrics very easy. As a result, adding metrics, dashboards, and alerting rules is now a core part of our process each time we deploy a new component.

Repeatability & safety #

While Prometheus and Grafana gave us the power to detect when the system changed, we also adopted tooling and processes that allowed us to better manage and understand how our system was changing.

First, a quick note: It is fairly common for early-stage products to be built in an ad hoc fashion, free of a lot of the tooling and processes that are required to operate more mature products. The key decision to make is at what point this additional structure and overhead should be applied:

- Too early, and the product will be stuck in a quagmire of tooling before it has a chance to deliver real value to customers

- Too late, and development, QA, and operations teams will not be able to support the pace of change required to improve and grow the product.

Postmark is here for the long haul. In order to manage it properly, we wanted to make it easy for the entire team to make improvements but ensure those changes could be tested, applied safely, and repeated if necessary.

Our team has long been proponents of capturing processes and configuration in source control (Infrastructure as Code). Previously, we had used Chef for our products, and later adopted Ansible to better manage configuration across more of our systems. However, these tools only offer part of the automation solution.

In 2018, we adopted Terraform for provisioning resources in the cloud, to round out our automation story.

Terraform and Ansible have been game-changers for us. Due to the nature of these tools, we have a powerful and safe way to build and maintain resources in the cloud. Managing cloud resources with teams larger than a few people can create massive risk: a single wrong click could disrupt network traffic, drop databases, or cause a bevy of other problems, with no easy way to determine “what went wrong.”

Terraform and Ansible gave us a way to confidently evolve our infrastructure, as well as to recreate resources in a predictable manner in our testing environments. In our experience, “Infrastructure as Code” and automation tooling are essential to make a successful migration to the cloud.

Democratizing access #

Through the adoption of Prometheus, Grafana, Ansible, and Terraform, the team now has tools to easily provision, deploy, and manage resources in the cloud. This enables them to make better decisions, earlier in the development process, as well as see the results of their efforts.

While each of these tools has a learning overhead, they allow us to precisely communicate our intent and avoid “drift” or “works on my machine” problems that occur when configuration is managed in an ad hoc fashion. This has the knock-on effect of reducing human error, as well as contributing to shared knowledge so we can better operate Postmark as a team.

Part III: The journey’s end #

In the first two parts of this piece, we discussed some of the major changes we made to our process and systems to ensure a low-risk, low-disruption migration of Postmark to AWS.

In spite of all of our effort, one big scary beast remained: Moving our primary database to AWS. Now let me share what steps we took to de-risk this ordinarily scary process, and some final thoughts about our journey and what the team has accomplished.

Chopping the Gordian Knot #

There’s a common phrase that goes “The less you do, the more you can get done.”

In our migration, we took a great deal of time trimming the fat and optimizing before moving to the cloud. With each service we migrated, we evaluated how we could split up our primary monolithic MySQL database, delegating datasets and responsibilities to specific services, and new data stores.

We’re not there yet, but we have adopted an informal rule:

“The service that writes the data, owns the data.”

What this means is that the service writing the data is also the service we communicate with to retrieve it. This is a pretty common practice, but it’s easy to form bad habits or break this rule “just this once” when growing a product or to meet a deadline. Sticking to the rule helps to establish clear boundaries between services, and break complex dependency graphs.

By splitting our monolithic database apart, we could more easily estimate the requirements for MySQL instances in AWS, and scale services independently.

A side takeaway here is that logical separation (different DBs on the same physical server) can go a long way towards making this scale-out/refactor easier when an app eventually grows. It’s a relatively cheap gamble to logically isolate DBs that can pay huge dividends when a product becomes successful.

The other “magic” we applied during this migration was to split reads and writes to our SQL cluster. This allowed us to better utilize our primary and replica instances to serve requests. For example, GET requests in our APIs can be served from any read replica (each of which has sub-second replication lag), while PUT/POST/DELETE requests are applied to the primary instance and are fully consistent.

Splitting reads vs. writes allowed us to build out our API in AWS reading from local resources instead of relying on a high-latency link to our colocation facility. As a side note, splitting reads vs. writes early in an app’s lifecycle can pay great dividends, but be careful, and make sure you understand the implications of CAP if you go this route!
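Here is a minimal sketch of that routing decision, assuming the split is made on the HTTP method as described above. `ConnectionRouter`, the round-robin replica choice, and the `needs_fresh_read` escape hatch are assumptions added for illustration. The escape hatch is there because a replica can lag slightly behind the primary, so a request that must read its own write should go to the primary.

```python
from __future__ import annotations

import itertools

READ_METHODS = {"GET"}


class ConnectionRouter:
    """Choose a database target for a request based on its HTTP method."""

    def __init__(self, primary: str, replicas: list[str]) -> None:
        self.primary = primary
        # Round-robin over replicas; None means "no replicas configured".
        self._replicas = itertools.cycle(replicas) if replicas else None

    def target_for(self, http_method: str, needs_fresh_read: bool = False) -> str:
        is_read = http_method.upper() in READ_METHODS
        if is_read and not needs_fresh_read and self._replicas is not None:
            return next(self._replicas)  # may be slightly stale
        return self.primary  # writes and must-be-fresh reads
```

For example, `ConnectionRouter("primary", ["replica-1", "replica-2"]).target_for("POST")` returns `"primary"`, while successive `GET` requests alternate between the two replicas.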

By logically isolating datasets and spreading database traffic, we were able to reduce the overall risk in the final cutover from our co-located servers to AWS. This spread one “giant” event into many smaller events that could be conducted while the public-facing systems remained online.

The final boss #

Over the course of the AWS migration, our goals had been to improve operability and reliability of the Postmark systems while ensuring continuity of service for customers and reducing risk before moving our app fully to AWS.

Although we made a huge amount of progress and split workloads to “regional” replicas, some operations in Postmark still need to update the primary instance of our databases. As a result, we eventually needed to promote one of our AWS replicas to be the primary, completing our migration to the cloud.

In February 2020, just before COVID hit in the U.S., our team met and agreed to make our final push to move Postmark fully into AWS. We named this effort “PAWS” (Postmark on AWS), and we were all-in.

Over the preceding 20 months, we had whittled down most of the traffic to our primary MySQL system and developed a much clearer understanding of whether the system was behaving normally. This allowed us to build out the final bits to make the final cutover to AWS with confidence.

The PAWS project encompassed building out independent clusters for our service bus (RabbitMQ), Mail Processing Services, APIs, and Web app, and finally, promoting our AWS SQL cluster to serve as the primary cluster.

The team was tasked with the following work to prepare for this cutover:

- Add “region-awareness” to the components (more on this in a moment).

- Define additional health and performance metrics for any services being migrated.

- Ensure new clusters were addressable from our integration tests so that we could compare performance/behavior/stability between our existing systems and AWS.

- Prepare tooling and recovery procedures for the cutover event.

Building “region-awareness” into our services primarily meant that services were enhanced to communicate with resources based on “regional” URLs. Practically, this could mean that those URLs were pointed at resources in our co-located data center, the AWS region, or a proxy in between.

This allowed us to easily route traffic between our two data centers through the use of weighted DNS and load balancers. We see this frequently with cloud providers that include us-east-2, eu-west-1, and so forth in the hostname of their APIs; this is a powerful way to isolate and route traffic at any scale.
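The two ideas here, regional URLs and weighted routing, can be sketched together. Everything named below is hypothetical: the `example.com` hostnames, the `colo` label, and the functions are illustrations, and in our case the weighting was done by DNS and load balancers rather than in application code.

```python
from __future__ import annotations

import random

# Hypothetical regional base URLs; each service is configured with these
# instead of a single hard-coded host.
REGIONAL_ENDPOINTS = {
    "colo": "https://api.colo.example.com",
    "us-east-1": "https://api.us-east-1.example.com",
}


def endpoint_for(region: str, path: str) -> str:
    """Build the URL for a resource in a specific region."""
    return f"{REGIONAL_ENDPOINTS[region]}/{path.lstrip('/')}"


def pick_region(weights: dict[str, int], rng: random.Random) -> str:
    """Pick a region with probability proportional to its weight."""
    regions = sorted(weights)
    return rng.choices(regions, weights=[weights[r] for r in regions], k=1)[0]
```

Shifting traffic then becomes a matter of changing the weights, for example from `{"colo": 90, "us-east-1": 10}` toward `{"colo": 0, "us-east-1": 100}`, while the services themselves stay unchanged.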

Region-awareness also allowed us to navigate directly to the new public endpoints in both data centers to test their behavior and performance, ahead of routing customer traffic to those new systems. Having all clusters “live” and being able to blend traffic to one or the other was critical in avoiding a prolonged and risky “stop-the-world” cutover.

During this time, we also refactored our Mail Processing Service (i.e. the code that actually processes and delivers mail in Postmark) so that it could operate independently in either data center. This gave us a new capability: multi-region mail processing, and with small enhancements, the ability to isolate broadcast traffic from transactional mail, which was critical to the success of our Message Streams release. Having an independent sending cluster that we could load test and validate ahead of the final failover gave us a huge confidence boost that our core functionality would have minimal disruption when the final failover happened.

With full control over routing to our primary SQL instances, high confidence in our updated sending infrastructure, and an incremental approach to moving components to AWS, we were able to minimize the number of changes we’d need to apply during our cutover.

After about 4 months of work, we scheduled the failover of our primary databases for Saturday, July 11th, 2020.

The big event #

In preparation for the big event, we took a few final actions in the weeks leading up to the cutover:

- We slowly shifted customer traffic from our co-located data center to the new APIs and sending clusters in AWS. This was accomplished mainly with weighted DNS, with sensitivity to any increased error rates or latency that our customers might experience. We were able to see that our systems were scaled properly and that the core functionality (mail processing) would work perfectly.

- We developed a detailed checklist that included, for each step, the assigned engineer and the time frame for executing it. This also included pre- and post-event activities that our Product and Customer Success teams would need to complete.

- Our team was encouraged to script their tasks out, and have a manual backup plan if the automation for their tasks failed.

- We ensured each person participating had a clear set of metrics to “certify” that their components were functioning properly post-failover.

- We reviewed the checklist in advance and did a “dry run” on the Thursday before. This live rehearsal allowed us to discuss ordering and dependencies, gave our Customer Success team a sense of what would be impacted during the cutover, and allowed our team to develop a shared sense of pacing for the actual event.
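The idea of “certifying” a component against a clear set of metrics can be expressed as a simple range check. The sketch below is an assumption about how such a check could look, not the tooling we used: `MetricCheck`, `certify`, and the metric names in the usage note are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class MetricCheck:
    """An acceptable range for one post-failover metric."""

    name: str
    minimum: float
    maximum: float

    def passes(self, observed: float) -> bool:
        return self.minimum <= observed <= self.maximum


def certify(checks: list[MetricCheck], observed: dict[str, float]) -> list[str]:
    """Return the names of failed checks; an empty list means certified."""
    failures = []
    for check in checks:
        value = observed.get(check.name)
        # A metric that is missing entirely counts as a failure: silence
        # after a cutover is not evidence of health.
        if value is None or not check.passes(value):
            failures.append(check.name)
    return failures
```

A component owner might define checks such as `MetricCheck("api_error_rate", 0.0, 0.01)` ahead of time, so that the decision during the event is mechanical rather than a judgment call made under pressure.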

In reviewing our status history for that day, you’ll see that the years of slow progress and preparation paid off. We were able to start the event on time, and even with some minor hiccups, we were able to complete the cutover within an hour with no disruption to our API, and within the limits for planned delays for processing.

Denouement #

While our work to migrate Postmark to AWS has been largely successful, we haven’t yet achieved all of the goals we listed in the beginning. We still have areas we’d like to improve, and not everything is idempotent or adheres to 12FA. Ideas we had when we started evolved and disappeared as we peeled the onion.

But: We followed through on many of the big goals listed earlier, and we’ve seen those improvements have a cumulative positive impact on our day-to-day operations.

The migration of Postmark to AWS was a huge milestone that allowed us to modernize core services and positioned Postmark for growth over the years to come. As a result of the migration, we can dynamically scale many of our services and data stores, and this has led to even higher reliability and availability of Postmark endpoints and processing, as well as more consistent and predictable performance for customers.

There are many strategies for evolving and improving software. For our team, incremental, sometimes slow, progress allowed us to improve the reliability and operability of Postmark, in a way that was transparent to customers. This approach to improving software requires fortitude from the team and patience from stakeholders, but allowed us to prioritize reducing stress for the team and delivering an incredibly reliable product to our customers.

PS: does this sound like the kind of thing you like to do, too?

Then check out our careers page. Our Engineering team is growing!