Making AI assistants better at email: Postmark's new documentation tooling

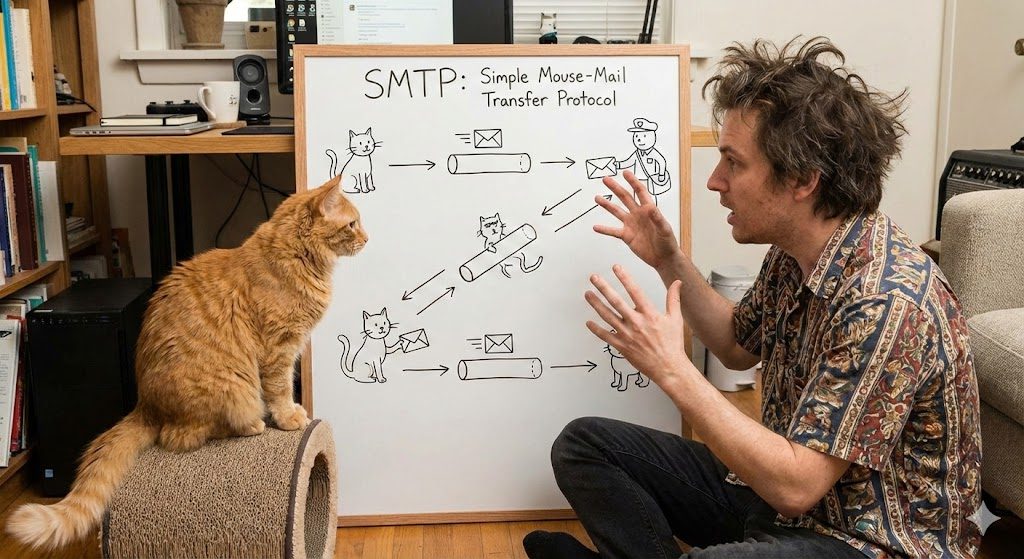

Getting an AI assistant to help with your email integration shouldn't feel like explaining SMTP to your cat. But until recently, that's kind of what it was like - AI tools could tell you about email APIs, but they often got the details wrong or gave you outdated information.

We've been thinking about this problem, and we've shipped three updates to make AI assistants genuinely useful when you're working with Postmark.

What we built

1. llms.txt: Context for AI assistants

We published an llms.txt file that gives AI tools like ChatGPT and Claude accurate, structured information about Postmark's API, features, and best practices.

Instead of AI assistants guessing or making assumptions about our documentation, they can now reference this file to give you correct details about authentication, endpoints, message streams, deliverability features, and integration patterns.

How to use it: When you need help with Postmark integration, troubleshooting, or understanding specific features, paste https://postmarkapp.com/llms.txt into your AI tool. Your assistant will have the context it needs to provide better, more accurate guidance.
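If your AI tool can't fetch URLs on its own, you can pull the file down and put it in front of your question yourself. Here's a minimal sketch of that idea. The helper names (`fetch_llms_txt`, `build_prompt`) and the delimiter lines are our own illustration, not part of any Postmark library, and how you pass the resulting text to your assistant depends on the tool you use.

```python
import urllib.request

LLMS_TXT_URL = "https://postmarkapp.com/llms.txt"


def fetch_llms_txt(url: str = LLMS_TXT_URL, timeout: float = 10.0) -> str:
    """Download the llms.txt file and return it as text (needs network access)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


def build_prompt(context: str, question: str) -> str:
    """Place the reference text ahead of the question so the assistant sees it first."""
    return (
        "Use the following Postmark reference when answering.\n\n"
        f"--- BEGIN llms.txt ---\n{context.strip()}\n--- END llms.txt ---\n\n"
        f"Question: {question.strip()}"
    )


# Example usage (hypothetical question):
# prompt = build_prompt(fetch_llms_txt(), "How do I authenticate API requests?")
```

Keeping the fetch and the prompt assembly separate means you can cache the file locally and reuse it across conversations.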

2. Pre-built AI prompts

We added an AI prompts section to our documentation with ready-to-use prompts for common integration tasks.

Each prompt is designed to generate production-ready code that includes error handling, best practices, and proper Postmark API usage. Copy a prompt, paste it into your AI tool, and get working code.

Available prompts:

- Node.js email integration

- Rails with ActionMailer setup

- Laravel Mail configuration

- Password reset flows

- Event-driven notification systems

- Inbound email processing

- Better Auth integration

You'll save time - no more documentation deep-dives just to send a welcome email. You'll also avoid common mistakes since the prompts include our recommendations for deliverability, security, and reliability.
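To give a feel for what "includes error handling" means in practice, here's a rough sketch of a welcome-email sender written against Postmark's email endpoint. This is our own illustration, not output from any of the prompts above: the function names, subject line, and body text are made up, and we're assuming the default `outbound` transactional message stream.

```python
import json
import urllib.error
import urllib.request

API_URL = "https://api.postmarkapp.com/email"


def build_welcome_email(sender: str, recipient: str, name: str) -> dict:
    """Build the JSON payload for a plain-text welcome email."""
    if "@" not in recipient:
        raise ValueError(f"not an email address: {recipient!r}")
    return {
        "From": sender,
        "To": recipient,
        "Subject": "Welcome aboard",
        "TextBody": f"Hi {name}, thanks for signing up!",
        "MessageStream": "outbound",  # assumed: the default transactional stream
    }


def send_email(server_token: str, payload: dict, timeout: float = 10.0) -> dict:
    """POST the payload to Postmark and return the decoded JSON response."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Accept": "application/json",
            "Content-Type": "application/json",
            "X-Postmark-Server-Token": server_token,
        },
        method="POST",
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return json.loads(response.read())
    except urllib.error.HTTPError as err:
        # The API answered with an error status; surface its response body.
        detail = err.read().decode("utf-8", errors="replace")
        raise RuntimeError(f"Postmark rejected the request ({err.code}): {detail}") from err
    except urllib.error.URLError as err:
        # The request never reached the API (DNS failure, timeout, and so on).
        raise RuntimeError(f"could not reach Postmark: {err.reason}") from err
```

Splitting payload construction from sending keeps the validation testable without touching the network, which is the kind of structure you'd want any generated code to have too.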

3. Postmark MCP server: AI assistants that actually do things

Earlier this year, we launched our Model Context Protocol (MCP) server, an experimental project from Postmark Labs. If you've ever asked an AI assistant about email and gotten a response that was close but not quite right, you know the frustration. AI tools have been like carrier pigeons trying to understand the internet—they know messages need to be delivered, but they're missing the whole digital infrastructure part.

The MCP server changes that by giving AI assistants a direct connection to your Postmark account. Instead of just talking about how to send emails, they can actually send them.

The MCP server currently lets you:

- Analyze email delivery statistics for specific time periods and tags

- Send individual emails with full control over the template and dynamic content

- Use your existing Postmark templates

- List available templates

It's early access, which means we're sharing it while we build. The current version works with a single Postmark server, and we're actively looking for feedback on what to add next. Multi-server support? Bounce handling automation? Advanced analytics queries? Tell us what would help your workflow.

We built this with Postmark's security standards—your server token stays in your local environment, all operations are logged, and no email content gets stored by the MCP layer.
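If you're curious what those capabilities look like as plain API requests, here's a small sketch using Postmark's REST API: outbound stats filtered by date and tag, a template listing, and a send-with-template payload. Treat it as an approximation. We're not claiming the MCP server issues exactly these calls, and the `POSTMARK_SERVER_TOKEN` variable name and the helper functions are assumptions made for this example.

```python
import os
from typing import Optional
from urllib.parse import urlencode

API_BASE = "https://api.postmarkapp.com"


def auth_headers() -> dict:
    """Read the server token from the local environment instead of hard-coding it."""
    token = os.environ["POSTMARK_SERVER_TOKEN"]  # assumed variable name
    return {"Accept": "application/json", "X-Postmark-Server-Token": token}


def stats_url(from_date: str, to_date: str, tag: Optional[str] = None) -> str:
    """URL for outbound delivery stats over a date range, optionally for one tag."""
    params = {"fromdate": from_date, "todate": to_date}
    if tag:
        params["tag"] = tag
    return f"{API_BASE}/stats/outbound?{urlencode(params)}"


def templates_url(count: int = 50, offset: int = 0) -> str:
    """URL for listing the templates available on the server."""
    return f"{API_BASE}/templates?{urlencode({'count': count, 'offset': offset})}"


def template_email(sender: str, recipient: str, alias: str, model: dict) -> dict:
    """JSON body for POST /email/withTemplate using an existing template."""
    return {
        "From": sender,
        "To": recipient,
        "TemplateAlias": alias,
        "TemplateModel": model,  # dynamic content merged into the template
        "MessageStream": "outbound",  # assumed default transactional stream
    }
```

An assistant connected through MCP hides these details behind tool calls, but the same ingredients are involved: a server token kept in your environment, a date range and tag for stats, and a template plus its dynamic content for sending.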

Why this matters

AI assistants are becoming part of how developers work. They're not replacing your expertise; they're handling the tedious parts so you can focus on what actually matters. The problem is, they're only useful if they have accurate information and the ability to take action.

Email is critical infrastructure for your application. When your AI assistant gives you wrong information about authentication, suggests deprecated endpoints, or can't actually help you test that password reset flow, you end up debugging issues that shouldn't exist in the first place. These updates target both gaps: llms.txt and the pre-built prompts give assistants accurate information, and the MCP server gives them the ability to act. The result? Less time searching docs, fewer integration mistakes, and an AI assistant that can actually help you ship faster.

Give it a try

Start with the AI prompts if you're building a new integration - they're the fastest way to get working code. When you need more specific help, reference our llms.txt file in your AI conversations. And if you want to experiment with AI assistants that can actually send emails and check stats, try the MCP server.

As always, we'd love to hear what you think. What other AI tooling would help your workflow? What Postmark tasks would you like to delegate to an assistant? Let us know.